Testing JavaScript with Amazon’s Mechanical Turk

This is the first in a short series of blog posts covering our approach to JavaScript testing.

The need for systematic JavaScript testing in the wild

Like most companies, we test our JavaScript thoroughly in-house: we deploy to a staging setup that is identical to our production setup, then run a suite of Selenium tests on a mixture of real and virtual machines covering the common browser–operating system pairings we see in the wild. If the code passes these tests, we then test on iOS and Android.

Most companies would stop there and push to production. We don’t.

There are a great many subtle effects and unexplained artifacts that occur in the wild that just don’t happen in a testing environment:

- People using strange hardware configurations,

- New, unusual or badly written plugins,

- Bad or intermittent connections,

- Hundreds of browser windows open simultaneously for days,

- the list continues.

Recreating every possible combination of these in a test environment is not tractable. Instead we take a statistical approach and draw a sample from the population (users of the Internet).

Amazon’s Mechanical Turk

If you haven’t come across Amazon’s Mechanical Turk, or MTurk for short, we highly recommend researching it. Essentially it is a crowd-sourcing platform. You pay lots of people (Workers) small amounts of money to work on simple tasks (Human Intelligence Tasks or HITs).

Workers form our sample of real world users.

We have two types of HIT:

- A scripted task, where the worker follows a series of predefined steps mimicking our Selenium setup, and

- A realistic task, where the worker has to accomplish the sort of task they would typically tackle on a web page (information seeking, navigating, etc.).

The JavaScript is executed in a debug mode that streams raw data, partially computed data and error messages back to us. These results allow us to troubleshoot data collection and provide ground truth for testing data processing.
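As an illustration only, a debug-mode collector along these lines might look like the sketch below. The function names, the payload shape and the batching are all assumptions made for the example, not the actual spider.io implementation. The `send` callback is injected so the same code can run in a browser or in a test harness.

```javascript
// Hypothetical debug-mode trace collector (illustrative names throughout).
// `send` is any function that ships a JSON string back to the server.
function createDebugTrace(send, maxBatch = 20) {
  const buffer = [];

  // Ship everything collected so far and empty the buffer.
  function flush() {
    if (buffer.length === 0) return;
    // splice(0) removes and returns all buffered entries.
    send(JSON.stringify(buffer.splice(0)));
  }

  // Store one entry; flush automatically once the batch is full.
  function record(kind, payload) {
    buffer.push({ kind, payload, t: Date.now() });
    if (buffer.length >= maxBatch) flush();
  }

  return {
    raw: (payload) => record("raw", payload),
    partial: (payload) => record("partial", payload),
    error: (err) =>
      record("error", { message: String((err && err.message) || err) }),
    flush,
  };
}
```

In a browser, `send` could wrap something like `navigator.sendBeacon(url, body)`, with `url` pointing at a collection endpoint of your own choosing.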

Handling bias

Clearly the pool of MTurk workers is not strictly representative of our customers’ users. To handle this we split our tasks into two pools:

- Random HITs, where anyone can take part, and

- Stratified HITs, where we specify the worker’s browser and operating system.

HITs requiring a specific setup are common on MTurk as many of the tasks require esoteric applets, environments, or settings. Stratified HITs are assigned in the ratio that we see users (with a browser–operating system pair making a stratum).
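To make the proportional assignment concrete, here is a minimal sketch that splits a batch of HITs across strata according to observed user counts. It uses the largest-remainder method so that the quotas always add up to the batch size; that rounding rule, the function name and the example counts are assumptions for illustration rather than a description of our allocator.

```javascript
// Split `totalHits` across strata in proportion to observed user counts.
// `userCounts` maps a stratum label (browser/OS pair) to a user count.
function allocateStratifiedHits(userCounts, totalHits) {
  const entries = Object.entries(userCounts);
  const totalUsers = entries.reduce((sum, [, n]) => sum + n, 0);
  if (totalUsers === 0) throw new Error("no observed users");

  // Start each stratum at the floor of its exact proportional share.
  const quotas = entries.map(([stratum, n]) => {
    const exact = (n / totalUsers) * totalHits;
    const hits = Math.floor(exact);
    return { stratum, hits, remainder: exact - hits };
  });

  // Hand the leftover HITs to the strata with the largest remainders.
  const leftover = totalHits - quotas.reduce((sum, q) => sum + q.hits, 0);
  const byRemainder = [...quotas].sort((a, b) => b.remainder - a.remainder);
  for (let i = 0; i < leftover; i++) byRemainder[i].hits += 1;

  return Object.fromEntries(quotas.map((q) => [q.stratum, q.hits]));
}

// Made-up traffic figures, purely for the example.
const exampleCounts = {
  "Chrome / Windows": 50,
  "Firefox / Windows": 30,
  "Safari / OS X": 20,
};
console.log(allocateStratifiedHits(exampleCounts, 10));
```

With these made-up counts, a batch of 10 HITs is split 5, 3 and 2.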

Continuous testing

As well as being a good pre-production test, MTurk is fantastic for continuous testing. As new browsers are released, existing browsers and operating systems are updated, and environments change, your diverse pool of workers reflects all of this. Changes in browsers and operating systems can automatically feed back into your strata for Stratified HITs. This lets you spot problems early and does not rely on you updating your in-house test setup.
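One way this feedback loop could work is to rebuild the strata periodically from a log of recent visits. The sketch below is an assumption-laden example: the `{ browser, os }` record shape, the `minShare` cut-off for dropping very rare pairs and the function name are all hypothetical.

```javascript
// Derive strata (browser/OS pairs with their counts) from recent visits.
// Pairs seen in less than `minShare` of visits are dropped as too rare.
function buildStrata(visits, minShare = 0.01) {
  const counts = new Map();
  for (const { browser, os } of visits) {
    const key = `${browser} / ${os}`;
    counts.set(key, (counts.get(key) || 0) + 1);
  }

  const strata = {};
  for (const [key, n] of counts) {
    if (n / visits.length >= minShare) strata[key] = n;
  }
  return strata;
}
```

Re-running this over a sliding window of traffic means a newly released browser version shows up as its own stratum as soon as enough real users are seen with it.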

How it fits together

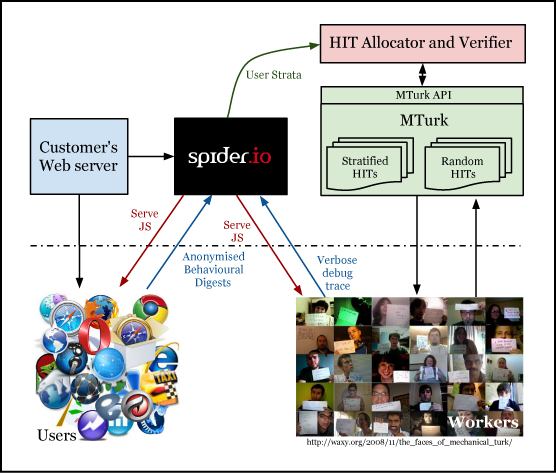

Pages are served from our customers’ web servers with a link to the production spider.io JavaScript embedded. The production JavaScript is downloaded from our web servers and sends back an anonymised digest of the browser’s behaviour.

Periodically we update the HIT Allocator and Verifier’s list of common user environments. We allocate a mixture of stratified and random HITs, each of which requires either a scripted or a realistic task. A verbose debug trace is sent up from the worker’s browser, allowing us to test and improve our code.

Cost vs value

There is no doubt that using MTurk is an expensive way to test your JavaScript. However, as an addition to systematic in-house testing, we believe it pays dividends. We pay about $0.05 for each 30-second HIT. (The majority of our HITs actually take less than 30 seconds.) This means we can get our script tested in 100 different real-world settings for about $5, or roughly £3.

Contrast this with our test box: a £400 Mac mini running OS X with three Windows virtual machines, giving us a total of 13 browser–operating system pairs, on one connection, one set of hardware and under constant load.
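The per-batch cost quoted above is easy to check with a few lines of arithmetic. This sketch works in US cents, before any currency conversion. The `feeRate` parameter is a hypothetical placeholder for any platform commission; no such figure is given here, so it defaults to zero.

```javascript
// Cost of a batch of HITs in integer cents, to avoid floating-point drift.
// feeRate is an assumed, optional commission (0 means no fee is applied).
function batchCostCents(hits, rewardCents, feeRate = 0) {
  return Math.round(hits * rewardCents * (1 + feeRate));
}

// Format a cent amount as a dollar string with two decimal places.
function formatDollars(cents) {
  return `$${(cents / 100).toFixed(2)}`;
}

console.log(formatDollars(batchCostCents(100, 5))); // $5.00
```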

MTurk clearly has a place in the JavaScript tester’s toolbox.