What’s in an IP address?

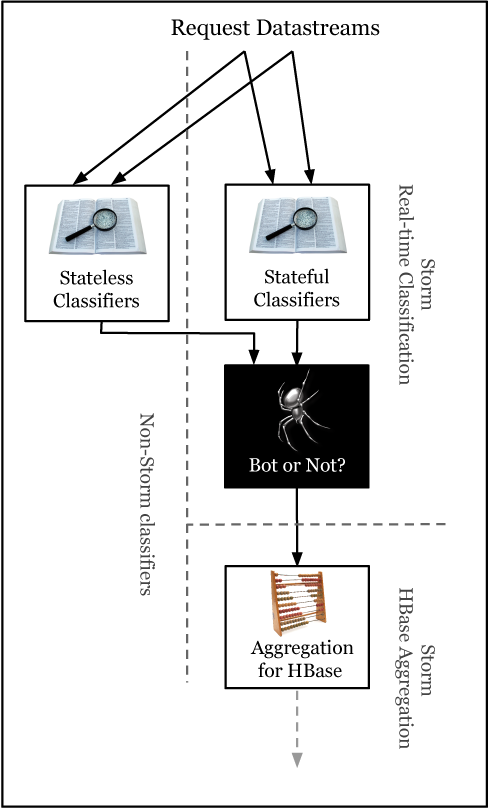

At spider.io our aim is to pick out all deviant website traffic. This involves trying to spot anomalies in enormous data streams in real time, where the data is informationally sparse and, worse still, much of what we see is not what it first appears to be. This is because, just as we are trying to identify unwanted website activity, the perpetrators are trying to evade our efforts. In this cat-and-mouse game almost every source of information can be spoofed, masked, reverse-engineered or riddled with misdirection.

In our hunt for content scrapers, spam injectors, click jackers, mouse jackers, cookie stuffers and phantom traffic, we look for visitor fingerprints that are difficult to spoof: for example, client-side behaviour, access patterns and consistency across the OSI layers, from the application layer down to below the TCP layer.

In this post we consider a much simpler type of visitor fingerprint, and one that is particularly difficult to fake: the requesting IP address. (NB The requesting IP address may not be the originating IP address, but that is for another post.) The IP address, the core routing key of the Internet, is carried in every packet and so accompanies every HTTP request. Anyone who wants to receive the response to a request has to give a real address at which they can be reached: because HTTP is normally carried over TCP, a client that forges its source address never receives the server's reply and cannot even complete the connection handshake.

Below we look at four useful clues that an IP address gives us.

Geolocation

Firstly, given an IP address, we can say something about the real-world geographic location or geolocation of the requesting website visitor.

Whilst it’s well known that web services have been geolocating IP addresses for years, it may surprise you just how cheap (free) and easy it is to get hold of reliable geolocation data for IPs. Consider MaxMind, for example. It offers a downloadable database of geolocation data that allows an IP address to be located in milliseconds, down to city level. This database is absolutely free. And to really help you get up and running, MaxMind even throws in APIs for a range of programming languages.
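
Conceptually, a geolocation database of this kind is a table of address ranges, each tagged with a location. MaxMind's own files use a binary format read through its client libraries, so what follows is not MaxMind's API; it is a minimal sketch of the lookup idea, assuming a hypothetical in-memory table of non-overlapping IPv4 CIDR blocks. Addresses are converted to integers, the blocks are kept sorted, and a binary search finds the block containing the query address. The class `GeoTable` and the sample rows (placed on the RFC 5737 documentation ranges, with made-up cities) are illustrative only.

```python
import bisect
import ipaddress
from typing import Iterable, List, NamedTuple, Optional, Tuple


class GeoRange(NamedTuple):
    start: int    # first address of the block, as an integer
    end: int      # last address of the block (inclusive)
    city: str
    country: str


class GeoTable:
    """Hypothetical in-memory geolocation table of non-overlapping IPv4 blocks."""

    def __init__(self, rows: Iterable[Tuple[str, str, str]]) -> None:
        ranges: List[GeoRange] = []
        for cidr, city, country in rows:
            net = ipaddress.IPv4Network(cidr)
            ranges.append(GeoRange(int(net.network_address),
                                   int(net.broadcast_address), city, country))
        ranges.sort()                               # sort by start address
        self._ranges = ranges
        self._starts = [r.start for r in ranges]    # search keys for bisect

    def locate(self, ip: str) -> Optional[GeoRange]:
        """Return the block containing ip, or None if no block covers it."""
        key = int(ipaddress.IPv4Address(ip))
        # Index of the last block whose start address is <= key.
        i = bisect.bisect_right(self._starts, key) - 1
        if i >= 0 and key <= self._ranges[i].end:
            return self._ranges[i]
        return None


# Made-up sample rows on the RFC 5737 documentation ranges.
table = GeoTable([
    ("192.0.2.0/24", "London", "GB"),
    ("198.51.100.0/24", "Paris", "FR"),
    ("203.0.113.0/24", "Tokyo", "JP"),
])

hit = table.locate("198.51.100.7")
print(hit.city if hit else "unknown")   # prints: Paris
```

Because each lookup is a binary search over sorted ranges, it needs only O(log n) comparisons, so it stays fast even when the table holds millions of ranges.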

Whois

Okay, so we’ve got our first clue: IP geolocation. We can also say something about who or what is associated with a particular IP address. To do so we turn first to an aptly named service, Whois. Dating back to the early 1980s, Whois is almost as old as the internet itself, and today it acts as a human-readable directory of, among other things, IP address allocations. To make a Whois query, we open a TCP connection to a Whois server on port 43 and send the IP address as a single line of text. We get back such informational goodies as the company or organisation that holds the IP address or range, along with associated contact information.
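
The Whois protocol itself (RFC 3912) is about as simple as network protocols get: the client sends one line terminated by CRLF, and the server replies with free-form text and then closes the connection. The sketch below uses only Python's standard `socket` module and starts at IANA's server, `whois.iana.org`, which typically answers with a `refer:` line naming the regional registry that holds the range; the hypothetical `lookup_ip` follows that one referral. Because field names differ between registries, the hypothetical `parse_whois` only collects raw `key: value` pairs rather than assuming any particular schema.

```python
import socket
from typing import Dict, List


def whois_query(query: str, server: str = "whois.iana.org",
                port: int = 43, timeout: float = 10.0) -> str:
    """Send one Whois query (RFC 3912) and return the server's full reply."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        # The request is just the query text terminated by CRLF...
        sock.sendall(query.encode("ascii") + b"\r\n")
        # ...and the server replies with free text, then closes the connection.
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois(text: str) -> Dict[str, List[str]]:
    """Loosely collect 'key: value' lines; repeated keys keep every value."""
    fields: Dict[str, List[str]] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("%", "#")):   # skip blanks and comments
            continue
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields.setdefault(key.strip(), []).append(value.strip())
    return fields


def lookup_ip(ip: str) -> Dict[str, List[str]]:
    """Ask IANA first, then follow a single 'refer:' line to the registry."""
    fields = parse_whois(whois_query(ip))
    referral = fields.get("refer")
    if referral:
        fields = parse_whois(whois_query(ip, server=referral[0]))
    return fields
```

Registries may rate-limit heavy Whois use, so a real system would likely cache results per network range rather than querying once per request.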

DNS

Next we turn to DNS, the phonebook of the internet. DNS records map domain names to IP addresses, but it’s actually trivially easy to go the other way, i.e. to look up a domain name from just an IP address. Whenever a domain such as spider.io is set up, public DNS records are published that map it to its IP addresses (indeed, you wouldn’t be reading this page without the help of such a record). In addition, many organisations publish reverse DNS (PTR) records, which point from the IPs they hold back to a domain name (enthusiastic readers who try a reverse lookup on this site’s IP may notice that it is hosted on Amazon’s EC2).
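
Under the hood, a reverse lookup is an ordinary DNS query for a PTR record under a special domain: an IPv4 address has its octets reversed under `in-addr.arpa`, and an IPv6 address has its hex digits (nibbles) reversed under `ip6.arpa`. The hypothetical helper `ptr_name` below builds that query name by hand, purely to show the mechanism; Python's standard `ipaddress` module exposes the same value as the `reverse_pointer` attribute, and `socket.gethostbyaddr()` performs the actual lookup.

```python
import ipaddress


def ptr_name(ip: str) -> str:
    """Build the DNS name that is queried for a PTR (reverse DNS) record."""
    addr = ipaddress.ip_address(ip)
    if addr.version == 4:
        # 192.0.2.1 -> 1.2.0.192.in-addr.arpa
        return ".".join(reversed(str(addr).split("."))) + ".in-addr.arpa"
    # IPv6: expand to all 32 hex digits, then reverse them one nibble at a time.
    nibbles = addr.exploded.replace(":", "")
    return ".".join(reversed(nibbles)) + ".ip6.arpa"


if __name__ == "__main__":
    for ip in ("192.0.2.1", "2001:db8::1"):
        name = ptr_name(ip)
        # Cross-check against the standard library's own answer.
        assert name == ipaddress.ip_address(ip).reverse_pointer
        print(name)
```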

So with a quick reverse DNS lookup, can we put a domain name to the IP address? Well, not so fast. For a start, the reverse DNS record often isn’t there. And remember what we said earlier about faking it? Unfortunately for us, reverse DNS goes on the list as ‘easily faked’. The reverse DNS entry is typically maintained by whoever holds the IP address, which is rather handy if, for instance, you want your homemade crawler to look like it belongs to googlebot.com. With that in mind, a common way to verify a reverse DNS entry is to take its result (the domain name returned by the reverse lookup) and perform a forward DNS lookup on it, a technique called forward-confirmed reverse DNS (FCrDNS). If the forward lookup brings you back to the IP in question, you can be satisfied that the reverse DNS record is valid: the forward record is controlled by the owner of the domain rather than the holder of the IP, so an impostor would need control of both to pass the check.
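
Forward-confirmed reverse DNS can be sketched with nothing but Python's standard `socket` and `ipaddress` modules: `socket.gethostbyaddr()` does the reverse (PTR) lookup and `socket.getaddrinfo()` the forward (A/AAAA) lookup. The helper names are hypothetical, and the optional `domains` check is an added assumption: for a visitor claiming to be a known crawler, we want the PTR name to sit under that crawler's domain as well as to resolve back to the same IP.

```python
import ipaddress
import socket
from typing import Iterable, Optional, Set


def reverse_lookup(ip: str) -> Optional[str]:
    """PTR lookup: the hostname published for ip, or None if there is none."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:          # socket.herror / socket.gaierror: no usable record
        return None


def forward_lookup(hostname: str) -> Set[str]:
    """A/AAAA lookup: every address the hostname resolves to, in canonical form."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except OSError:
        return set()
    # info[4] is the sockaddr tuple; its first element is the address string.
    return {str(ipaddress.ip_address(info[4][0])) for info in infos}


def in_domain(hostname: str, domains: Iterable[str]) -> bool:
    """True if hostname is one of domains, or a subdomain of one of them."""
    host = hostname.rstrip(".").lower()
    for domain in domains:
        d = domain.rstrip(".").lower()
        # Require a label boundary so "fakegooglebot.com" does not match "googlebot.com".
        if host == d or host.endswith("." + d):
            return True
    return False


def fcrdns(ip: str, domains: Optional[Iterable[str]] = None) -> Optional[str]:
    """Forward-confirmed reverse DNS: the PTR hostname if it checks out, else None."""
    hostname = reverse_lookup(ip)
    if hostname is None:
        return None
    # Optional extra check: the PTR name must sit under one of the expected domains.
    if domains is not None and not in_domain(hostname, domains):
        return None
    # The forward records are controlled by the domain's owner, so the hostname
    # must resolve back to the very address we started from.
    if str(ipaddress.ip_address(ip)) not in forward_lookup(hostname):
        return None
    return hostname
```

For instance, `fcrdns(ip, ["googlebot.com", "google.com"])` approximates the check Google describes for verifying Googlebot. Note the `"." + d` in `in_domain`: a plain `endswith("googlebot.com")` would also accept an impostor's `fakegooglebot.com`.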

DNSBLs

DNS blacklists, or DNSBLs, are another application built on DNS. DNSBLs contain IP addresses that have been flagged for deviant or nefarious activity, spamming in particular. By querying various DNSBLs we can put together an online character reference for an IP address, to the point where we may be able to identify known Tor exit nodes or machines that have been flagged as scanning drones.
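
DNSBL queries piggyback on ordinary DNS: reverse the octets of an IPv4 address, append the blacklist's zone name, and resolve the result. An answer (conventionally an address in 127.0.0.0/8, whose value encodes the reason for the listing) means the IP is listed; a non-existent domain means it is not. The sketch below assumes IPv4 only, and its helper names are hypothetical. Each DNSBL has its own usage policy and return codes, and some refuse queries that arrive via large public resolvers, so check a list's documentation before trusting its answers.

```python
import ipaddress
import socket
from typing import Dict, Iterable, List


def dnsbl_query_name(ip: str, zone: str) -> str:
    """192.0.2.99 checked against zone -> 99.2.0.192.<zone>"""
    addr = ipaddress.IPv4Address(ip)       # raises AddressValueError for non-IPv4
    return ".".join(reversed(str(addr).split("."))) + "." + zone.strip(".")


def dnsbl_lookup(ip: str, zone: str) -> List[str]:
    """Answer codes (e.g. 127.0.0.x) if ip is listed in zone, else []."""
    try:
        _, _, addresses = socket.gethostbyname_ex(dnsbl_query_name(ip, zone))
    except OSError:
        # NXDOMAIN means "not listed"; timeouts and other failures also land here,
        # so a production version should tell them apart.
        return []
    return sorted(addresses)


def character_reference(ip: str, zones: Iterable[str]) -> Dict[str, List[str]]:
    """Query several DNSBLs and report which of them list the address."""
    return {zone: dnsbl_lookup(ip, zone) for zone in zones}
```

For example, `character_reference("192.0.2.99", ["zen.spamhaus.org"])` returns a dict mapping each zone to its answer codes, where an empty list means the address was not listed (or the query failed).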

So from a single IP address—without even considering behaviour associated with this IP—we can already start to build a compelling picture of who our mystery visitor might be and why they have visited.